Resources

This is a link for markdown syntax

This is a link for mdbook - Letax equation reference

LaTeX/Mathematics Wikibooks

How to share local codes with remote codes on github

1. About adding existing projects to GitHub

A project has already been created in local See docs here

-

Create a new repo on github. To avoid errors, do not initialize the new repository with

README, license, or `.gitignore files. You can add these files after your project has been pushed to GitHub. -

Open Terminal and change your working directory to your local folder

-

Initialize the local directory as a Git repository.

git init -b main -

Add the files in your new local repository. This stages them for the first commit.

git add .Commits the tracked changes and prepares them to be pushed to a remote repository. To remove this commit and modify the file, use

git reset --soft HEAD~1and commit and add the file again. -

In Terminal, add the URL for the remote repository where your local repository will be pushed.

$ git remote add origin <REMOTE_URL> # Sets the new remote $ git remote -v # Verifies the new remote URL -

Push the changes in your local repository to GitHub.com.

git push origin mainoriginis usually used for your own remote by convention.

2. Forking workflow

For working in the open source community or collaborating on your own projects

Resources

- https://www.atlassian.com/git/tutorials/comparing-workflows/forking-workflow

- https://gist.github.com/Chaser324/ce0505fbed06b947d962

-

Fork 'official' repo to your own remote github

-

Clone the forked repo from remote to your local system by

git clone <url>Origin is used for your personal remote forked repo by default while running git clone.

-

Add a remote for the 'official' repo

git remote add upstream <url>Upstream is used for the official repository by convention.

-

Working in a branch: making & pushing changes

-

Create a new branch for workflow

git checkout -b new-branch -

Checkout to an existing branch

git checkout some-branch -

keep your fork up to date to the latest 'official' repo

git pull (upstream main)

-

-

Making a pull request

-

push changes to my own remote repo that is accessible to others

git push origin my-branch -

Cleaning work is probably needed before pull request.

-

Rebase your development branch to avoid conflict when new commits have been made to the upstream main branch.

git checkout my-branch git rebase my-main -

Squash several small commits to a more compact one by

git rebase -i my-main

More about

git rebasecan be found here. -

-

Create a "pull request" on github to let project maintainers know and then merge to

upstream main.

-

3. Start from the beginning

Notes from Advanced Git Tutorial | Google IT Automation with Python

Git is a visual control system (VCS), which can save code, configrations, histories, etc.

-

After installing git, the first thing to do is to tell Git who you are by execute command line

git config --global "me@email.com" -

Add new project and repo

$ mkdir project $ cd project # Create a new repo in local $ git init -

Stage changes and commit

$ git status $ git add . $ git commit -m "comments" # A shortcut to stage any changes to tracked files and commit them in one step # only for small changes $ git commit -a -m"message" -

Show changes in commit

# to show change logs $ git log # to show changes with details $ git diff -u # or $ git log -p # shows only unstaged changes by default $ git diff # show changes staged but not commited $ git diff --stanged # review changes before staging them git add -p -

Remove or rename the file in the repo

# remove files from repo, stop the file from being tracked by git $ git rm FILENAME # check out the files in the directory/repo $ ls -l $ ls -al # rename the file $ git rename new_name old_name # create .gitigore in root repo $ touch .gitignore # add files into .gitignore $ echo .idea > .gitignore -

Undo changes before committing

-

Change the file back to previous state has not been staged:

git checkout filename -

Change the file has been staged but not commit, counterpart to

git add:git reset HEAD filename

-

-

Amend commit

- Overwrite previous commit (only works for local repo, not for remote repo):

git commit --amend

- Overwrite previous commit (only works for local repo, not for remote repo):

-

Rollbacks

$ git revert HEAD // HEAD is regarded as a point to a snapshot $ git revert commit_id # identify a commit by commit_id $ git log -p -2

Branch - a pointer to a particular commit

default branch - main (or master in old github)

# check up the current branch

$ git branch

# create a new branch

$ git branch new_branch

# check out the latest snapshot for both files in this branch

$ git checkout new_branch

# create a new branch and switch to it

$ git checkout -b another_branch

# delete the branch

$ git branch -d old_branch

# merge a branch to another

$ git merge another_branch

merge conflict

git log --graph --oneline

git merge -abort - stop merging and back to previous status

4. How to reset and go back to your previous commit

-

First to check out the history change logs and find out which version I want to return back by commit_id:

git lg -

Then go to the log that I want to go by

git reset commit_idBe cautious of using

git reset -hard commit_id -

Lastly, add, commit, and push. If there is a new commit message after

git resetoperation, it will combine the last few commits that you do not want into a single commit.

Java

Java fundamentals

Exceptions

In Java, an exception is an event that disrupts the normal flow of the program 1.

Many methods in Java to read and write files require that exceptions are handled. There are two main approaches: lbyl (look at before you leap) and eafp (easy to ask for forgiveness than permission).

-

Look at before you leap

In the following code, we check if the arguements are valid before operation.

private static int divideLBYL(int x, int y) { if (y != 0) return x / y; else return 0; } -

Easy to ask forgiveness than permission

In this approach, we run the method first and catch the exception if any exception (exception handler).

private static int divideEAFP(int x, int y) { try { return x / y; } catch(ArithmetricException e) { return 0; } }

Checked Exception vs. Unchecked Exception

The Exception Handling in Java is one of the powerful mechanism to handle the runtime errors so that the normal flow of the application can be maintained. The Exception class family in Java is depicted below:

There are basically three types of exceptions: Checked Exception, Unchecked Exception, and Error. Sometimes, Error can be considered as Unchecked Exception.

-

Checked Exceptions: All the subclasses of Exception class except for RuntimeException and its subclasses are checked exceptions. That is, if there is an checked exception in the code, the program won't be compiled if no exception handling.

public class CheckedVsUnchecked { public static void main (String[] args) { readFile("myFile.txt"); } private static void readFile (String fileName) { // will throw a FileNotFoundException, that is checked exception FileReader file = new FileReader(fileName); } }The above code would not compile because fileName may not exist, which will throw a FileNotFoundException (unchecked expcetion). To handle unchecked exception, we can use either try-catch method or

throwsexception in the function signature 2. The difference betweenthrowwithin a method andthrowsin a method signature can be found in this article.public class CheckedVsUnchecked { // it is important to throw an exception in main method as well // in order to catch the exception thrown by readFile method public static void main (String[] args) throws FileNotFoundException { readFile("myFile.txt"); } private static void readFile (String fileName) throws FileNotFoundException { FileReader file = new FileReader(fileName); } } -

Unchekced Exception: The RuntimeException subclass of the Exception class and all its subclasses are unchecked exception. Runtime will not check this type of exception and the program will be compile but may fail. For example,

public class CheckedVsUnchecked { public static void main (String[] args) { String name = null; printLength(name); // will throw NullPointerException even compiled. } private static void printLength (String myString) { System.out.println(myString.length()); } }In this case, it is better to use try-catch method to handle this exception.

Call stack

When throwing an expcetion, Java automatically prints a stack trace, which is showing the call stack. Each thread of execution has its own call stack, and the thread is shown in the first line of the stack call.

try-catch(-finally)

A common way to handle exception is to throw a new exception with some information to indicate where might go wrong. For example,

private static int divide() {

int x, y;

try {

x = getInt(); // a self-defined method that can get input from typing the keyboard.

y = getInt();

return x / y;

} catch (NoSuchElementException e) {

throw new NoSuchElementException("no suitable input");

} catch (ArithmetricException e) {

throw new ArithmetricException("attempt to divide by zero");

}

}

Alternatively, we could catch multiple exception in the main methods, such as

private static void main (String[] args) {

try {

int result = divide();

} catch (ArithmetricException | NoSuchElementException e) { // it is not logical symbol or

System.out.println(e.toString);

System.out.println("Unable to excute, the computer shutting down");

}

}

When Java code throws an exception, the runtime looks up the stack for a method that has a handler (like catch) that can process it. If it finds one, it passes the exception to it. If it doesn't, the program exists.

No matter whether an exception occur in try-block or not, finally will ALWAYS be excuted. For example,

// Java program to demonstrate control flow of try-catch-finally clause

// when exception occur in try block but not handled in catch block

class GFG {

public static void main (String[] args) {

// array of size 4.

int[] arr = new int[4];

try {

int i = arr[4];

// this statement will never execute

// as exception is raised by above statement

System.out.println("Inside try block");

}

// not a appropriate handler so the following statement will also not execute

catch(NullPointerException ex) {

System.out.println("Exception has been caught");

}

finally { // will execute

System.out.println("finally block executed");

}

// rest program will not execute

System.out.println("Outside try-catch-finally clause");

}

}

However, if NullPointerException was replaced by ArrayIndexOutOfBoundsException, the correct exception, the statement in the catch will execute.

Even if there is a return in try block, the finally statement will also be excuted.

private static int printAnumber () {

try {

return 3;

}

catch (Exception e) {

return 4;

}

finally {

return 5;

}

// output: 5, becauase the finally statement will override the above statement.

}

Read and Write File

If we want to make object persist, we need to write object into a file. See an example as below. Remember to close the file after writing. Failing to close streams can really cause problems such as resouce link leak and lcoked file.

Java Thread

What is thread?

In Computer Science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler (a part of operating system). 线程是操作系统能够进行运算调度的最小单位。In most of cases, a thread is a component of a process. The multiple threads of a given process may be executed concurrently (via multithreading capabilities), sharing resources such as memory, while different processes do not share these resources 3. Below is an illustration of relationship between program, process, thread, scheduling, etc.

The following image shows two threads running on one process.

In Java, a thread is a thread of excution in a program, i.e., the direction or path that is taken while a program is being excuted. The thread class extends Object and implements Runnable 4. A thread enables multiple operations to take place within a single method. Each thread in the program often has its own program counter, stack, and local variables.

Creating a Thread

There are two ways to create a new thread of execution. One is to declare a class to be a subclass of Thread. This subclass should override the run method of class Thread. An instance of the subclass can then be allocated and started.

To execute a thread, we need to call the start() function instead of run. The purpose of start() is to create a seperate call stack for the thread. See an example below:

class ThreadTest extends Thread {

@Override

public void run(){

try {

System.out.println("Thread "

+ Thread.currentThread().getId()

+ " is running" )

}

catch (Exception e) {

e.printStackTrace();

}

}

public static void main (String[] args) {

for (int i = 0; i < 8; i ++) {

ThreadTest test = new ThreadTest();

test.run();

}

}

}

output:

Thread 1 is running

Thread 1 is running

Thread 1 is running

Thread 1 is running

Thread 1 is running

Thread 1 is running

Thread 1 is running

Thread 1 is running

Here only Thread 1 is running because of calling run() method directly, and the same call stack is used for all new thread. But if we change test.run() to test.start(), then we will have an output like Thread 10 is running, in which the number is randomly allocated. 5

public class MultiThreads extends Thread {

@Override

public void run() {

for (int i = 0; i < 5; i++) {

System.out.println(i);

}

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

}

Extending Thread Class

reference

https://www.javatpoint.com/exception-handling-in-java

https://www.youtube.com/watch?v=bCPClyGsVhc

https://en.wikipedia.org/wiki/Thread_(computing)

https://docs.oracle.com/javase/7/docs/api/java/lang/Thread.html

https://www.geeksforgeeks.org/start-function-multithreading-java/

Database

Data Structure

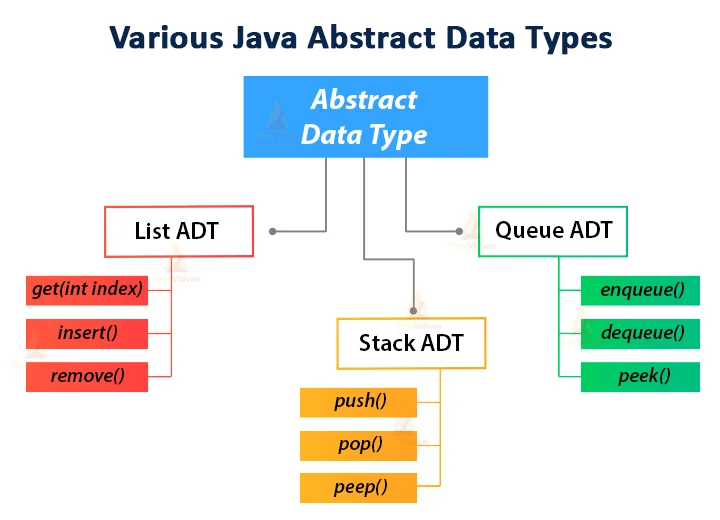

This document outlines the fundamental data structure in Java. The most commonly used data structures in Java include ArrayList, HashMap, Queue, Stack, and BST (Binary Search Tree). These data structures are clearly different but have some relationship. The following scheme exhibits the relationship of these data structures. Notice that some are Interface and some are Class.

Abstract Data Types (ADT) vs. interface

An abstract data type is a self-contained, user-defined type that bundles data with a set of related operations 1. ADT can be classified as built-in and user-defined or as mutable or immutable 2. For example, the List interface is a Java built-in ADT, which defines a data structure with set of methods to operate on but without providing detailed implementation.

My own understanding of ADT is that it is a general concept, and interfaces in Java is in-built ADT for convenience for users.

Implementing an ADT in Java involves two steps. The first step is the definition of a Java Application Programming Interface (API), for interface for short, which describes the names of the methods that the ADT supoorts and how they are to be declared and used. Secondly, we need to define exceptions for any error conditions that can arise during operations 3. Java libraty provides various ADTs such as List, Stack, Queue, Set, Map as inbuilt interfaces that we implement using various data structures.

Collecction

Java Collection interface provides a architecture to store and manipulate a group of objects. The java.util package contains all the classes and interfaces for the Collection framework. The Collection interface is implemented by all the classes in the framework, and it only declares the method that each collection will have.

List

List interface extends Collection interface, which stores a list type data structure in which we can store an ordered collection of objects, and can have duplicate values. List interface is implemented by the classes ArrayList, LinkedList, Vector and Stack.

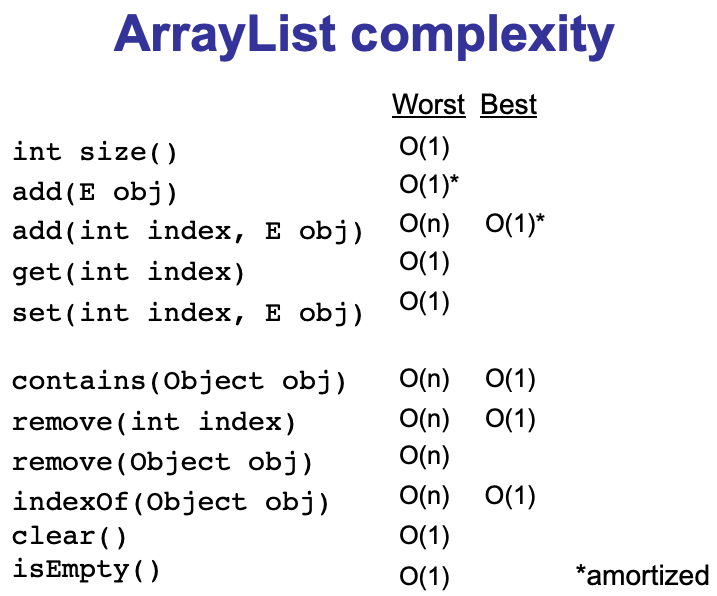

ArrayList

uses a resieable array to store objects, built on top of array. The size, isEmpty, get, set and iterator operations run in constant time, while the add operation runs in amortized constant time. Other operations roughly run in linear time, and the constant factor is low compared to LinkedList.

LinkedList

a linear data structure that consists of nodes holding a data field and a reference to another node. It is a doubly-linked list implementing both List and Deque interface. Some commonly used methods and their corresponding run time complexity are listed below:

-

.add(): add element to the end of the list and run time is constant O(1). -

.get(): get a specific element by traversing nodes one by one, and the worst run time is O(n). -

.remove(element): remove an element with runtime O(n).

In general, except for add, other LInkedList operations run in linear time.

LinkedList implementing Deque interface, which is extended Queue interface, can retrive the first element and remove it from the list, i.e., linkedList.poll() and linkedList.pop(). Also, this linkedlist can also add an element to the head like a stack, i.e., linkedList.push(e).

Stack

a generic, linear data structure that represents a Last-In-First-Out(LIFO) collection of objects. It allows to push/pop element in a constant time. Stack is a direct class of Vector, which is a synchronized implementation. A more complete and consistent set of LIFO stack operations is provided by the Deque interface, which can be implemeneted by ArrayDeque, e.g., Deque<Integer> stack = new ArrayDeque<Integer>().

Stack is also an ADT, and it can be implemented using Array, ArrayDeque and a Generic LinkedList.

Queue

a interface following First-In-First-Our (FIFO) principle typically. Except for priority queue, it order elements according to a supplied comparator or the element's natural ordering. Regardless of ordering, .remove() or .poll()operations will remove an element from the head of the queue (so called dequeue), and new element will be inserted at the tail of the queue (enqueue).

Deque

a linear collection (interface) that supports element insertion and removal at both ends, for example, addFirst() and addLast(). The Deque interface extends Queue.

-

When

Dequeis used as a queue, the collection follows FIFO manner, in which TheaddLast()operation is equivalent toadd()in queue method. -

Dequecan also be used as LIFO stacks, in which insertion and remove will be operated at the beginning of the deque. Thepopandpushoperations will be equivalent toremoveFirstandaddFirst, respectively, in deque.

Unlike the List interface, the Deque interface does not provide support for indexed access to element.

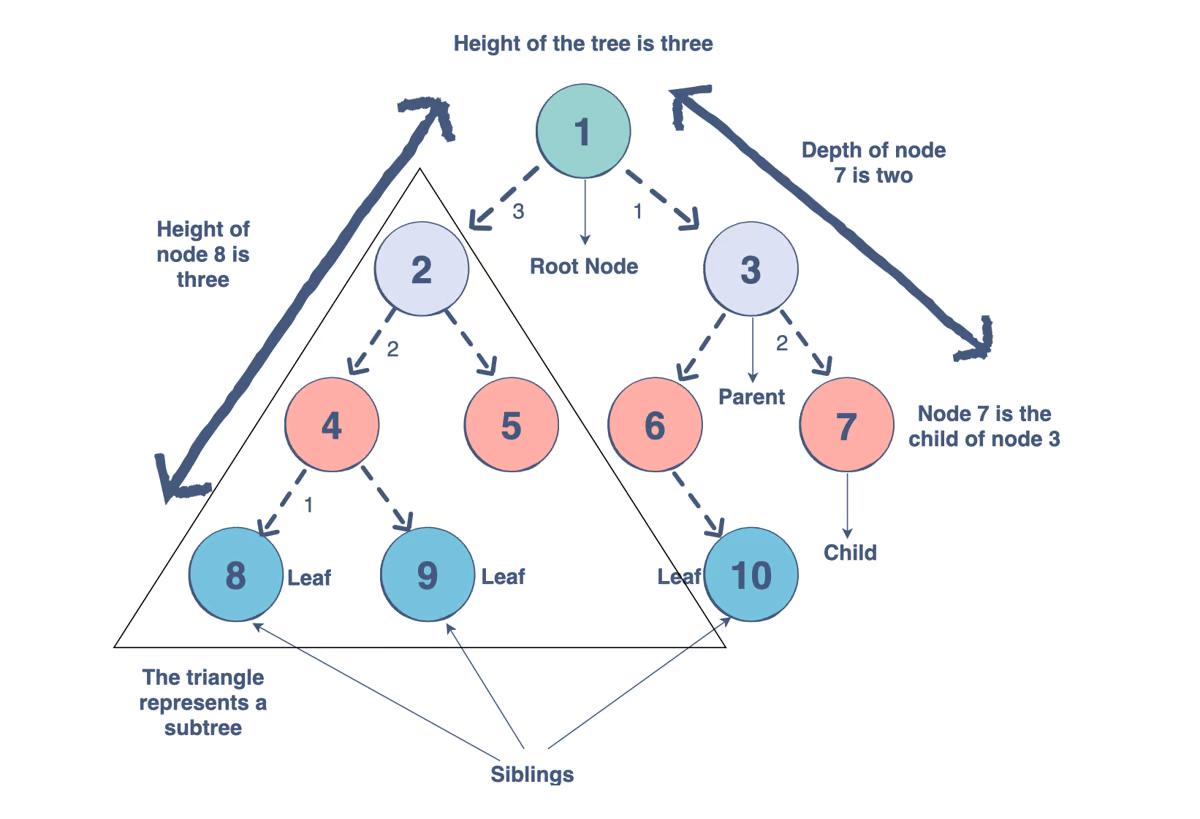

Tree

Tree structure and composition

Tree data structure

All the above mentioned data structures are linear, whereas Tree is a non-linear data structure. Tree is composed of a set of nodes, and each node store data of any types and a node pointing to its child nodes. The components and parameters of a tree is depicted below.

Types of trees: Binary Tree, Binary Search Tree (BST), Red-Black Tree (RBT), 2-3 Tree, 2-3-4 Tree and so on.

Applications with Tree

-

Storing hierarchy information, such file systems

-

Searching: Tree is more efficienct for searching than LinkedList

-

Inheritance: Trees are used for inheritance, XML parser, machine learning, and DNS, amongst many other things.

-

Indexing: Advanced types of trees, like B-Trees and B+ Trees, can be used for indexing a database.

and more ...

Treversal

There are two ways to traverse all nodes in a tree: Depth-First Traversal (DFT, 深度优先游历) and Breadth-First Traversal (BFT, 深度优先游历).

Depth-First Traversal (DFT)

Usually implemented by stack if using iteration.

-

Preorder: visit node, go left, go right

An illustration of the reorder traversal using stack data structure is shown below.

The corresponding codes are:

public static ArrayList<Integer> preOrderTraversalStack(TreeNode<Integer> root) { // create a new ArrayList to store values ArrayList<Integer> li = new ArrayList<>(); if (root == null) return li; // as it is DFT, uses stack Stack<TreeNode<Integer>> stack = new Stack<>(); stack.push(root); while (!stack.isEmpty()) { TreeNode<Integer> node = stack.pop(); // add node value to the list before push child nodes to the stack li.add(node.val); // due to FILO manner, push right child node in order to get the left child node if (node.right != null) stack.push(node.right); if (node.left != null) stack.push(node.left); } return li; }A more general method using Stack and Iteration:

public static List<Integer> preorderTraversalIter2(TreeNode<Integer> root) { List<Integer> list = new ArrayList<>(); Stack<TreeNode<Integer>> stack = new Stack<>(); TreeNode<Integer> node = root; while (node != null || !stack.empty()) { if (node != null) { stack.push(node); // add to the list once traverse on it list.add(node.val); node = node.left; } else { node = stack.pop(); node = node.right; } } return list; }Using recursion to implement preorder traversal:

public static ArrayList<Integer> preOrderTraversalRec(TreeNode<Integer> root) { // create a new ArrayList to store values ArrayList<Integer> li = new ArrayList<>(); if (root == null) return li; helperRecursion(root, li); return li; } private static void helperRecursion(TreeNode<Integer> root, ArrayList<Integer> li) { if (root == null) return; // Step 1: add node value to the list li.add(root.val); // Step 2: go left helperRecursion(root.left, li); // Step 3: go right helperRecursion(root.right, li); } -

Inorder: go left, visit node, go right

An illustration of the inorder traversal using stack data structure is shown below.

The corresponding implementation using stack is as follows:

public static List<Integer> inorderTraversalStack(TreeNode<Integer> root) { List<Integer> list = new ArrayList<>(); Stack<TreeNode<Integer>> stack = new Stack<>(); TreeNode<Integer> currNode = root; while(currNode!=null || !stack.empty()){ // traverse along the left edge to the bottom if (currNode != null) { stack.push(currNode); currNode = currNode.left; } else { // pop each node from the stack and add value to the list currNode = stack.pop(); list.add(currNode.val); // push the right child node if exists currNode = currNode.right; } } return list; }Recursion method:

public static ArrayList<Integer> inOrderTraversalRec(TreeNode<Integer> root) { ArrayList<Integer> li = new ArrayList<>(); helperRecusion(root, li); return li; } private static void helperRecusion(TreeNode<Integer> root, ArrayList<Integer> li) { if (root == null) return; helperRecusion(root.left, li); li.add(root.val); helperRecusion(root.right, li); } -

Postorder: go left, go right, visit node

An illustration of the postorder traversal using stack data structure is shown below.

An example code for implementation of postorder traversal shows as follows

// method 1: Normal iteration public List<Integer> postorderTraversalStack(TreeNode root) { Stack<TreeNode> stack = new Stack<>(); LinkedList<Integer> li = new LinkedList<>(); TreeNode node = root; while (node != null || !stack.isEmpty()) { while (node != null) { stack.push(node); node = node.left; } // unlike inorder traversal, here we only "peek" the node in the stack // as we need to check if it has right child node node = stack.peek(); // if it has, then traverse to the right child node if (node.right != null) { node = node.right; } else { // if it does not have, add this node value to the list node = stack.pop(); li.add(node.val); // check if this node is a right child node // if it is, pop out the node and add the value to the list while (!stack.isEmpty() && node == stack.peek().right) { node = stack.pop(); li.add(node.val); } node = null; } } return li; } // method 2: reverse preorder traversal public static List<Integer> postorderTraversalStackRev(TreeNode<Integer> root) { Stack<TreeNode<Integer>> stack = new Stack<>(); LinkedList<Integer> li = new LinkedList<>(); TreeNode<Integer> node = root; while(node != null || !stack.isEmpty()) { if (node != null) { stack.push(node); // to reverse preorder traversal, // add the value of each node traversed to the head of the list li.addFirst(node.val); // until the right side bottom node = node.right; } else { node = stack.pop(); node = node.left; } } return li; }Recursion method:

public static List<Integer> postorderTraversalRec(TreeNode<Integer> root) { List<Integer> li = new ArrayList<>(); helperRecursion(root, li); return li; } private static void helperRecursion(TreeNode<Integer> root, List<Integer> li) { if (root == null) return; helperRecursion(root.left, li); helperRecursion(root.right, li); li.add(root.val); }

Breadth-First Traversal (BFT)

-

Levelorder traversal: usually uses Queue to implement.

The following example returns a list of node values via BFT using iteractive method.

public static List<Integer> levelOrderStack(TreeNode<Integer> root) { List<Integer> li = new ArrayList<>(); if (root == null) return li; Queue<TreeNode<Integer>> queue = new LinkedList<>(); TreeNode<Integer> node = root; queue.add(node); while (!queue.isEmpty()) { node = queue.poll(); li.add(node.val); if (node.left != null) queue.add(node.left); if (node.right != null) queue.add(node.right); } return li; }Here is another example to re the node values level by level by store the node values in list and append each list to a list of lists. The main difference from the above example is that we add an extra variable

levelto track which level of the node is.public static List<List<Integer>> levelOrderLists(TreeNode<Integer> root) { List<List<Integer>> results = new ArrayList<>(); // a queue to place each node traversed Queue<TreeNode<Integer>> queue = new LinkedList<>(); if (root == null) return results; TreeNode<Integer> node = root; // add a variable to track level queue.add(node); while (!queue.isEmpty()) { List<Integer> li = new ArrayList<>(); int level = queue.size(); // add the value of the nodes in certain level to the corresponding list for (int i = 0; i < level; i++) { node = queue.remove(); li.add(node.val); // if the node has child nodes, then add the child node to the queue and increase the level if (node.left != null) queue.add(node.left); if (node.right != null) queue.add(node.right); } results.add(li); } return results; }The above implementation can also be achieved by recursive approach.

public static List<List<Integer>> levelOrderListsRec(TreeNode<Integer> root) { List<List<Integer>> results = new ArrayList<>(); helperListsRec(root, results, 0); return results; } private static void helperListsRec(TreeNode<Integer> root, List<List<Integer>> results, int level) { if (root == null) return; if (results.size() == level) { results.add(new ArrayList<>()); } results.get(level).add(root.val); helperListsRec(root.left, results, level + 1); helperListsRec(root.right, results, level + 1); }

Time complexity for different data structure

Other application case for Tree data structure

Binary Search Tree

reference

https://stackoverflow.com/a/23653021/15814147.

https://techvidvan.com/tutorials/java-abstract-data-type/#:~:text=What%20is%20an%20Abstract%20Data,of%20operations%20on%20that%20type.

Michael T. Goodrich. Data Structures and Algorithms in Java. 4th Edition. P264

https://java-questions.com/ds-time-complexity.html

Frontend

Vue3 and Javascript

How to create a dynamic router on a page

Vue-router Programmatic Navigation

const userId = '123'

router.push({ name: 'user', params: { userId } }) // -> /user/123

router.push({ path: `/user/${userId}` }) // -> /user/123

// This will NOT work

router.push({ path: '/user', params: { userId } }) // -> /user

In my case,

// In the component of Tombview

methods: {

open: function (userId) {

router.push({name: 'userTomb', params: {userId}})

}

// in the "router.js" file

const routes = [

{name: 'userTomb', path: '/userTomb/:userId', component: userTomb}

]

// : refers to params

// In the new router page

const User = {

template: '<div>User {{ $route.params.id }}</div>'

}

In my case,

{{ $route.params.userId }} // use this code to pass dynamic paramters.

When it is used in

{{ this.$route.params.userId }}

CSS Notes

Usefull link for CSS sstyle Note that you could not change html but only style

1 CSS rules

what this about

<style>

p {

color: blue;

font-size: 20px;

width: 200px;

}

h1 {

color: green;

font-size: 36px;

width: center;

}

</style>

p is a selector;

In the curly braces, there is a declaration, containing property ´´´color´´´and value ´´´blue´´´ zero or more declarations are allowed.

The collection of these CSS rules is what's called a stylesheet.

2 CSS selectors: Element, Class, and ID Selectors

2.1 element selector

<p> ... </p>

2.2 class selector

.blue {

color: blue;

}

In html part:

<p class="blue">...</p>

2.3 id selector

Can only be used once in the HTML document.

#name {

color:blue;

}

<p id="name">...</p>

2.4 grouping selectors

div, .blue{

color: blue;

}

3 Combining Selectors

3.1 Element with Class Selector

//Every p that has a class = "big"

p.big{

font-size: 20 px

}

An example:

<p class="big"> ... </p> // font-size: 20px

<div class="big"> ... </div>

3.2 Child Selector

//every p that is a direct child of article

article > p {

color: blue;

}

<article><p>...</p></article> // only this content has blue text.

...

<p>...</p>

<article><div><p>...</p></div></article>

3.3 Descendant Selector

//every p that is inside (at any level) of article

article p {

color: blue;

}

<article><p>...</p></article> // Blue text

...

<p>...</p> // Unaffected

<article><div><p>...</p></div></article> // Blue text

3.4 Not Limited to element Selector

//every p that is inside (at any level) of element with class = colored""

.colored p {

color: blue;

}

//every element with class = "colored" that is a direct child of article element

article > .colored{

color: blue;

}

3.5 Summary

combining selectors

- Element with class selector: selector.class

- Child(direct) selector: selector>selector

- Descendent selector: selector selector

4 Pseudo-Class Selector

:link

:visited

:hover

:active

:nth-child

Styling links is not exactly as straight forward as styling a regular element, and that's because links have states. And these states can be expressed using our pseudo-classes. An example:

header li {

list-style: none

}

// visited means that HTML allows that after you click a particular link that a different style can be applied to that link than an unclicked link

// In our case, however, we don't want to differentiate between the two, so we'll style them both together.

a:link, a:visited { // <a> tag defines a hyperlink, which is used to link from one page to another.

text-decoration: none;

background-color: green;

border: 1px solid blue;

display: block; // <a> tag is an inline element. Here we change it to a block-level element.

width: 200px;

text-align: center;

margin-bottom: 1px;

}

// An active is that state when the user actually clicks on the element but hasn't yet released his click.

a:hover, a:active {

background-color: red;

color: purple;

}

// the nth child pseudo-selector allows you to target a particular element within a list.

header li:nth-child(3) {

font-size: 24px;

}

// Set every odd member has a gray backgroud.

section div:nth-child(odd) {

background-color: gray

}

// When the cursor hovers on the 4th member, the 4th member change the color to green.

section div:nth-child(4):hover {

background-color: green;

cursor: pointer;

}

5 Style placement

5.1 Head style <style>...</style>

Head styles are usually there override external ones.

5.2 Place style inline

Great for quick testing.

<p style="text-align: center;">...</p> // not recommended

5.3 External CSS stylesheet

Mostly-used one in real sites.

<link rel="stylesheet" href="style.css"

6 Conflict resolution

6.1 Origin Precedence

- when in conflict Simple rule: last declaration wins It is based on the principal that HTML is processed sequentially top to bottom.

- when no conflict Sample rule: declarations merge

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<title>Cascade of CSS</title>

<link rel="stylesheet" href="external.css">

<style>

p {

color: maroon;

}

</style>

</head>

<body>

<h1>Origin Example</h1>

<p>The rule is simple: last declaration wins.</p> // color: maroon

<p style="color: black;">If there is no conflict, declarations merge into one rule.</p> // color: black

</body>

</html>

In external css stylesheet:

p {

font-size: 130%;

background-color: gray;

color: white;

}

6.2 Inheritance

If you specify some CSS property on some element, all the children and grandchildren and so on and so on of that element will also inherit that property without you having to specify the property for each and every element.

6.3 Specificity

Most specific selector combination wines, which can be evaluated by score:

| 1 | 1 | 1 | 1 |

|---|---|---|---|

| style="..." | id | class, pseudo-class, attribute | # of element |

For example,

div p {color: green;} score = 0002

div #myparag {color: blue;} score = 0101

div.big p {color: green;} score = 0012

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<title>Inheritance in CSS</title>

<style>

header.navigation p { // score = 0012

color: blue;

}

p.blurb { // score = 0011

color: red;

}

p {

color: green !important; // !important will override over specificity.

}

</style>

</head>

<body>

<header class="navigation">

<p class="blurb">Lorem ipsum dolor sit amet, consectetur adipisicing elit. Vero soluta enim aut! Nihil nam obcaecati, fugiat sint sit libero voluptate eos incidunt odio neque cum, dignissimos aperiam, magnam nisi debitis.</p>

</header>

</body>

</html>

7 Styling Text

.style {

font-family: Arial, Helvetica, sans-serif;

color: #0000ff; // first '00': red; middle '00': green: last '00': blue

font-style: italic;

font-weight: bold;

font-size: 24px;

text-transform: capitalize;

text-align: center;

}

body {

font-size: 120%; // 120 % by default

}

body {

font-size: 120%; // default font = 16px; current font = 19px;

}

<div style="font-size: 2em;"> 2em text // font size is two times the currect font - 38px

<div style="font-size: 2em;"> 4em text // font size = 76px

<div style="font-size: .5em;> 2em again! </div> // font size = 76px

</div>

</div>

8 The Box Model

8.1 box-sizing

The box composes of margin, border, and padding.

box-sizing: border-box; The width refers to the whole box, which is hihgly recommended.

or box-sizing: content-box; The width refers to the content only, the default setting.

However, it should be noted that the box-sizing property does not inherit. To solve the problem, we can use * selector, which can apply the CSS style inside to all the elements.

* {

box-sizing:border-box;

}

8.2 Cumulative Margins

- Horizontal margins are cumulative.

- Vertical magins from two elements will collapse, and larger margin wins.

8.3 Content overflow

overflow: auto

overflow: scroll

overflow: hidden

overflow: invisible

9 Background properties

<body>

<h1>The background property</h1>

<div id="bg">Wolala</div>

</body>

#bg {

width: 500px;

height: 500px;

background-color: blue;

background-image: url('cat.png') // Use an image as a background.

background-repeat: no-repeat // repeat images or not.

background-position: top right // set image position

// or background: url('cat.png') no-repeat right center blue

}

10 Position Elements

10.1 by Floating

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<title>Two Column Design</title>

<style>

* {

box-sizing: border-box;

}

div {

/*background-color: #00FFFF;*/

}

p {

width: 50%;

/*border: 1px solid black;*/

float: left; // float to the left of the last element.

padding: 10px;

}

#p1 {

/*background-color: #A52A2A;*/

}

#p2 {

/*background-color: #DEB887;*/

}

section {

clear: left;

}

</style>

</head>

<body>

<h1>Two Column Design</h1>

<div>

<p id="p1">Lorem ipsum dolor sit amet, consectetur adipisicing elit. Quia distinctio aliquid cupiditate perferendis fuga, sit quasi alias vero sunt non, ratione earum dolores nihil! Consequuntur pariatur totam incidunt soluta expedita.</p>

<p id="p2">Lorem ipsum dolor sit amet, consectetur adipisicing elit. Dicta beatae voluptatibus veniam placeat iure unde assumenda porro neque voluptate esse sit magnam facilis labore odit, provident a ea! Nulla, minima.Lorem ipsum dolor sit amet, consectetur adipisicing elit. Eius nemo vitae, cupiditate odio magnam reprehenderit esse eum reiciendis repellendus incidunt sequi! Autem, laudantium, accusamus. Doloribus tempora alias minima laborum, provident!</p>

<section>This is regular content continuing after the the paragraph boxes.</section>

</div>

</body>

</html>

10.2 Relative and Absolute Element Positioning

-

Static positioning Normal document flow. Default for all, except html.

-

Relative Positioning Element is positioned relative to its position in normal document flow. Positioning CSS(offset) properties are: top, bottom, left, right. Html positioning is defaulted by relative

-

Absolute Positioning All offsets(top, bottom, left, right) are relative to the position of the nearst ancestor which has positioning set on it, other than static.

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<title>Positioning Elements</title>

<style>

* {

box-sizing: border-box;

margin: 0;

padding: 0;

}

h1 {

margin-bottom: 15px;

}

div#container {

background-color: #00FFFF;

position: relative;

top: 60px; // equivalent to 'from top'

}

p {

width: 50px;

height: 50px;

border: 1px solid black;

margin-bottom: 15px;

}

#p1 {

background-color: #A52A2A;

position: relative;

top: 65px;

left: 65px;

}

#p2 {

background-color: #DEB887;

}

#p3 {

background-color: #5F9EA0;

position: absolute; // the absolute positioning needs a relative or an absolute parent or an ancestor.

top: 0;

left: 0;

}

#p4 {

background-color: #FF7F50;

}

</style>

</head>

<body>

<h1>Positioning Elements</h1>

<div id="container">

<p id="p1"></p>

<p id="p2"></p>

<p id="p3"></p>

<p id="p4"></p>

</div>

</body>

</html>

11 Media Query Syntax

@media (max-width: 767px){ // media feature (resolves to true or false)

p {

color: blue;

}

Media Query Common Features

@media(max-width: 800px) {...}

@media(max-width: 800px) {...}

@media(orientation: portrait){...}

@media screen{...}

@media print{...}

Media Query Common Logical Operators

-

Devices with width within a range

@media(min-width: 768px) and (max-width: 991px){...} -

Comma is equivalent to OR

@media(max-width: 768px), (min-width: 991px){...}

Media Query Common Approach

p {color: blue;} // base styles

@media(min-witdh: 1200px)

@media(min-width:992px) and (max-width:1199px)

// Be sure that two sizes are not overlapped.

An example for how to use media queries

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8">

<title>Media Queries</title>

<style>

/********** Base styles **********/

h1 {

margin-bottom: 15px;

}

p {

border: 1px solid black;

margin-bottom: 15px;

}

#p1 {

background-color: #A52A2A;

width: 300px;

height: 300px;

}

#p2 {

background-color: #DEB887;

width: 50px;

height: 50px;

}

/********** Large devices only **********/

@media (min-width: 1200px){

#p1 {

width: 80%;

// p1 at width 1200 pixels or wider will take 80% of our screen

// when it is below 1200px, the p1 will go back to the original size.

}

#p2 {

width: 150px;

height: 150px;

}

}

/********** Medium devices only **********/

@media (min-width: 992px) and (max-width: 1199px){

#p1{

width: 50%;

}

#p2 {

width: 100px;

height: 100px;

}

}

</style>

</head>

<body>

<h1>Media Queries</h1>

<p id="p1"></p>

<p id="p2"></p>

</body>

</html>

Summary

- Basic syntax of a media query ** @media(media feature) ** @media(media feature) logical operator (media feature)

- Remember not to overlap breakpoints

- Usually, you provide base styling. Then change or add to them in each media query.

12 Responsive Design

what is a responsive website? It is a site that's designed to adapt its layout to the viewing environment by using fluid, proportion-based grids, flexible images, and CSS3 media queries. 12 columns grid responsive layout Checkout here for an example.

12.1 Introduction to Bootstrap

Bootstrap is the most popular HTML, CSS and JS framework for developing responsive, mobile first projects on the web. [https://getbootstrap.com/] bootstrap depends on jQuery S0 jQuery also needs to be download.

12.2 Bootstrap grid system

<div class="container"> // your Bootstrap grid always has to be inside of a container wrapper or .container-fluid.

<div class="row"> // The row class also creates a negative margin, to counteract the padding that the container class sets up.

<div class="col-md-4">Col 1</div>

...

</div>

</div>

12.2.1 Column class template

col-SIZE-SPAN

- SIZE screen width range identifier columns will collapes (i.e., stack) below that width, unless another rule applies

- SPAN How many columns element should span values: 1 through 12

<header class="container"> // your Bootstrap grid always has to be inside of a container wrapper.

<nav class="row"> // The row class also creates a negative margin, to counteract the padding that the container class sets up.

<div class="col-md-4">Col 1</div>

...

</nav>

</header>

Python

Python Course (University of Michigan)

https://www.coursera.org/learn/python/home/welcome

I took the course in Feb. 2018 without any coding experience before. However, I do not feel I really get Python because of lack of practice/exercise.

My interests start moving to data analysis recently, and I realized Python is a powerful tool in the world of data analytics. Therefore, I tried to pick up the course again and hopefully I could know it better this time (after I finished CS50 last year)— April 2021

Tips for writing Python (or any other code) Good names for variables Comments - documentation

Converting User Input

# convert floor number from Europe system to US system

inp=input('Europe floor?')

usf=int(inp) + 1

print('US floor', usf)

def abc():

\( \int x dx = \frac{x^2}{2} + C \)

\[ \mu = \frac{1}{N} \sum_{i=0} x_i \\ \int_0^\infty \mathrm{e}^{-x},\mathrm{d}x \] https://en.wikibooks.org/wiki/LaTeX/Mathematics

Learning in a hard way

numpy.array

When creates a numpy.array a = numpy.array([1,2,3]). It is 1-dimensional if not specified. The shape of a can be checked out by a.shape, and it will output (3,).

The number of dimensions will be transformed from 1 to 2 by exploting

-

a1 = a.reshape(1,3), giving an outputarray([[1,2,3]]) -

a2 = a.reshape(3,1), giving an output

array([[1],

[2],

[3]])

array.sum

- array.sum(axis=0): sum up along the column

a1.sum(axis=0)

#output: array([1, 2, 3])

a2.sum(axis=0)

#output: array([6])

- array.sum(axis=1): sum up along the row

a1.sum(axis=1)

#output: array([6])

a2.sum(axis=1)

#output: array([1, 2, 3]) -> 1D array?

a.sum(axis=1) and a.sum(axis=0) give the same output array([6]) because of only one dimension.

Easy coding

- ´x = x + 1´ is qual to ´x += 1´

Virtualenv - virtual environment manager

venv for Python 3 or virtualen for Python 2

Installing packages using pip and virtual environments

This is how I did for my "Energy-data" project:

Copy the following code into "init_py.sh" file

#!/bin/bash

set -e

PYTHON_ENV_NAME=venv

pip3 install virtualenv

# or 'sudo pip3 install virtualenv'

virtualenv -p python3 $PYTHON_ENV_NAME

echo "source $(pwd)/$PYTHON_ENV_NAME/bin/activate" > .env

source $(pwd)/$PYTHON_ENV_NAME/bin/activate # activate the local python environment

pip3 install jupyter

pip3 install matplotlib

pip3 install pandas

pip3 install scipy

pip3 install seaborn

pip3 install graphviz

pip3 install scikit-learn

echo -e "\n"

echo "Please run \"$ source $PYTHON_ENV_NAME/bin/activate\" to switch to the python environment."

echo "Use \"$ deactivate\" anytime to deactivate the local python environment if you want to switch back to your default python."

echo "Or install autoenv as described on project readme file to make your life much easier."

Other easy ways to do

Video source from Corey Schafer

Statistics rewind

Probability - The Science of Uncertainty and Data (2021)

Use the course to re-build my statistics knowledge.

1 Sample Space and Probability

1.1 Sample space - A set of outcomes

-

discrete/finite example

-

continuous example

1.2 Probability Axioms

-

Nonnegativity \(P(A) \geq 0 \)

-

Normalization \( P( \Omega ) = 1 \), \(\Omega \) is the entire sample space.

-

(finite) Additivity: A and B are disjoint, then the probability of their unions satisfies \(P(A \cup B) = P(A) + P(B)\) (to be strengthened later)

1.2.1 Simple consequences of the axioms

-

For a sampe space consist of a finite number of disjointed events, \[ P({s_1, s_2, ...., s_n}) = P(s_1) + P(s_2) + ...... P(s_n) \]

-

\(A \subset B\), then \(P(A) \leq P(B)\)

-

\(P(A \cup B) = P(A) + P(B) - P(A \cap B)\)

-

\(P(A \cup B) \leq P(A) + P(B))\)

1.3 Probability calculations

1.3.1 Uniform Probability Law

-

Discrete example

If the sample space consists of n possible outcomes which are equally likely (i.e., all single-element events have the same probability), \[ P(A) = \frac{\text{number of elements of A}}{n} \]

-

continuous example

probability = area

1.3.2 Discrete but infinite sample space

-

Sample space: {1, 2, 3 ....}

Given \(P(n) = \frac{1}{2^n}\), n = 1, 2, 3....

As \( P(\Omega) = 1 \): \(\frac{1}{2} + \frac{1}{4} + ....= \sum\limits_{n=1}^\infty \frac{1}{2^n} = \frac{1}{2}\sum\limits_{n=0}^\infty \frac{1}{2^n} = \frac{1}{2}\frac{1}{1-1/2} = 1\)

1.3.3 Countable aditivity axiom

Additivity holds only for "countable" sequences of events

If \(A_1, A_2, A_3 ...\) is an \(\underline{\text{infinite sequence of disjoined events}}\),

\[ P(A_1 \cup A_2 ......) = P(A_1) + P(A_2) + ...... \]

1.4 Mathematical background

1.4.1 Sets - A collection of distinc elements

-

finite: e.g. {a, b, c, d}

-

infinite: the reals (R)

-

\( \Omega \) - the universal set

-

Ø - empty set

What are reals?

The reals include rational numbers (terminating decimals and non-terminating recurring decimals and irrational numbers (non-terminating non-reccuring decimals

1.4.2 Unions and intersection

1.4.3 De Morgans' Law

-

\( (S \cap T)^c = S^c \cup T^c \) and \( (S \cup T)^c = S^c \cap T^c \)

-

\( (S^c \cap T^c)^c = S \cup T \)

1.4.4 Other important mathematical backgrounds

-

Sequences and their limits

squence: an enumerated collection of objects

-

When does a sequence converge

-

if \(a_i \leq a_{i+1}\)

-

the sequence "converge to \(\infty\)"

-

the sequence converge to some real number a

-

-

if \(|a_i - a| \leq b\), for \(b_i \to 0\), then \(a_i \to a\)

-

-

Infinite series

series(infinte sums) vs. summation(finite sums)

\(\sum\limits_{n=1}^\infty a_i = \lim\limits_{n\to\infty}\sum\limits_{i=1}^n a_i\)

-

\(a_i \leq 0\): limit exists

-

if term \(a_i\) do not all have the same sign:

a. limit does not exist

b. limit may exist but be different if we sum in a different order

c. Fact: limit exists and independent of order of summation if \(\sum\limits_{n=1}^\infty |a_i| \leq \infty\)

-

-

Geometric series (等比数列、等比级数)

\(\sum\limits_{i=0}^\infty a^i = 1 + a + a^2 + ...... = \frac{1}{1-a} \text{ |a| < 1} \)

1.4 Sets

1.4.1 Countable and uncountable infinite sets

-

Countable

-

integers, pairs of positive integers, etc.

-

rational numbers q (有理数), with 0 < q < 1

-

-

Uncountable - continuous numbers

-

the interval [0, 1]

-

the reals, the plane, etc.

How to prove the reals are uncountable - "Control's diagonalization argument"

-

Unit 2 Conditioning and independence

Refer to Section 1.3 - 1.5 in the textbook

2.1 Conditional and Bayes' Rules

2.1.1 The definition of conditional probability

P(A|B) = "probability of A, given that B occurred"

\[ P(A|B) = \frac{P(A \cap B )}{P(B)} \]

defined only when P(B) > 0

2.1.2 Conditional probabilities share properties of ordinary probabilities

-

\(P(A|B) \geq 0\)

-

\(P(\Omega|B) = 1\)

-

\(P(B|B) < 0\)

-

If \(A \cap C = Ø\), then \(P(A \cup C|B) = P(A|B) + P(C|B)\) also only applies to countable and finite sequence (countable additivity axioms).

2.1.3 Models base on conditional probabilities

1. The multiplication rule

\\(P(A \cap B) = P(B)P(A|B) = P(A)P(B|A)\\)

\\(P(A^c \cap B \cap C^c) = P(A^c \cap B) P(C^c|A^c \cap B) = P(A^c) P(B|A^c) P(C^c|A^c \cap B)\\)

\\(P(A_1 \cap A_2...\cap A_n) = P(A_1) \prod\limits_{i=2}^n P(A_i|A_1 \cap A_2...\cap A_i)\\)

2. Total probability theorem

3. Bayes' rules

2.2 Independence

2.2.1 Conditional independence

Independent of two events

-

Intuitive "definition": P(B|A) = P(B)

- Occurence of A provides no new information about B

Definition of independence:

\(P(A \cap B) = P(A) \times P(B)\)

whether two events disjoined or joined is not associated with independence

Independent of events complements

If A and B are independent, then A and \(B^c\) are independent.

Independent of events complements

Conditioning may affect independence

2.2.2 Independence of a collection of events

-

Intuitive "definition": Information on some of the events does not change probabilities related to the remaining events

-

Definition: Events \(A_1, A_2,....., A_n\) are called independent if:

\(P(A_i \cap A_j \cap .... \cap A_m) = P(A_i)P(A_j)...P(A_m)\)

Pairwise independence

n = 3:

\(P(A_1 \cap A_2) = P(A_1)P(A_2)\)

\(P(A_1 \cap A_3) = P(A_1)P(A_3)\)

\(P(A_2 \cap A_3) = P(A_2)P(A_3)\)

vs. 3-way indenpendence

\(P(A_1 \cap A_2 \cap A_3) = P(A_1)P(A_2)P(A_3)\)

Independence vs. pairwise independence

2.2.3 Reliability

Unit 3 Couting

3.1 Basic counting principle

r stages and \(n_i\) choices at stage i give the total number of possible choices \( n_1 * n_2 * ....n_r \)

3.2 Permutation

- Permutation - number of ways of ordering n elements (repetition is prohibited)

\[n * (n-1) * (n-2) * ... * 2 * 1 = n!\]

- Number of subsets of {1, 2, ...n} = \(2^n\)

3.3 Combinations

-

combinations \(\binom{n}{k}\)- number of k-element subsets of a given n-element set

How is combination equation derived?

Two ways of constructing an ordered sequence of k distinct items:

-

choose the k items one at a time:

\[ n (n-1) ... (n-k+1) = \frac{n!}{k!(n-k)!} \]

-

choose k items, then order them:

\[ \left( \begin{array}{c} n \\ k \end{array} \right)k! \]

There we have \[ \left( \begin{array}{c} n \\ k \end{array} \right) = \frac{n!}{k!(n-k)!} \]

-

3.3 Binominal coeffficient

-

Binominal coeffficient \(\binom{n}{k}\) - Binomial probabilities

Toss coins n times and each toss is given independent, P(Head) = p

\[ P(\text{k heads}) = \binom{n}{k}p^k (1-p)^{n-k} \]

If asking P(k heads without ordered), then

\[ P(\text{k heads}) = p^k (1-p)^{n-k} \]

Therefore, \(\binom{n}{k}\) is the number of k-head sequence

3.4 Partitions

-

multinomial coeffecient (number of partitions) =

\[ \frac{n!}{n_1! n_2! ... n_r!} \]

If r = 2, then \(n_1 = k\) and \(n_2 = n - k\). There is \(\frac{n!}{n! (n-k)!}\) which is \(\binom{n}{k}\)

- A simple example

4 Discrete random variables

4.1 Probability mass function (PMF)

Random variable(r.v.): a function from the sample space to the real numbers, notated as X.

PMF: probability distribution of X

\[ p_X(x) = P(X = x) = P({w \in \Omega, s.t. X(\omega) = x}) \]

4.2 Discrete Random variable examples

4.2.1 Bernoulli random variables

with parameter \(p \in [0,1]\)

\[ p_X(x) = \begin{cases} 1, p(x) = p \\ 0, p(x) = 1 - p \end{cases} \]

-

Models a trial that results in either success/failure, Heads/Tails, etc.

-

Indicator random variables of an event A, \(I_A\) iff A occurs

4.2.2 Uniform random variables

with paramters a,b

-

Experiment: pick one of a, a+1 .... b at a random; all equally likely

-

Sample space; {a, a + 1, .... b}

-

Random variables X: \(X(\omega) = \omega\)

4.2.3 Binomial random variables

with parameters: pasitive integer \(n; p \in [0,1]\)

-

Experiment: n independent toses of a coin with P(Heads) = p

-

Sample space: set of sequences of H and T of length n

-

Random variables X: number of Heads observed

-

Model of: number of successes in a given number of independent trials

\[ p_X(k) = \left(\begin{array}{c} n \\ k \end{array} \right)p^k(1-p)^{n-k}, k = 0, 1 ..., n \]

4.2.4 Geometric random variables

with parameter p: 0 < p ≤ 1

-

Experiment: infinitely many independent tosses of a coin: P(Heads) = p

-

Random variable X: number of tosses until the first Heads

-

Model of waiting times; number of tirals until a success

\[

p_X(k) = P(X = k) = P(T...TH) =(1-p)^{k-1}p, k = 1,2,3...

\]

4.3 Expectation/mean of a random variable

-

Definition:

\[ E[X] = \sum\limits_{x} xp_X(x) \]

-

Interpretation: average in large number of independet repetitions of the experiment

-

Elementary properties

-

If X ≥ 0, then E(X) ≥ 0

-

If a ≤ X ≤ b, then a ≤ E[X] ≤ b

-

If c is a constant, E[c] = c

-

The expected value rule:

\[ E[Y] = \sum\limits_y yp_Y(y) = E[g(X)] = \sum\limits_x g(x)p_X(x)

\] -

Linearity of expectation: \(E[aX+b] = aE[X] + b\)

-

4.4 Variance - a measure of the spread of a PMF

4.4.1 Definition of variance:

\[ var(X) = E[(X - \mu)^2] = \sum\limits_x (x - \mu)^2 p_X(x) \]

standard deviation: \(\sigma_X = \sqrt{var(X)}\)

4.4.2 Properties of the variance

-

Notation: \(\mu = E[X] \)

-

\(var(aX + b) = a^2var(X)\)

-

A useful formula:

\[ var(X) = E(X^2) - (E[X])^2

\]

Summary of Expectation and Variance of Discrete Random Variables

| Random Variables | Formula | E(X) | var(X) |

|---|---|---|---|

| Bernoulli (p) | \(p_X(x) = \begin{cases} 1, p(x) = p \\ 0, p(x) = 1 - p \end{cases} \) | \(p\) | \(p(1-p)\) |

| Uniform (a,b) | \(p_X(x) = \frac{1}{b-a}, a ≤ x ≤ b\) | \(\frac{a+b}{2}\) | \(\frac{1}{12}(b-a)(b-a-2)\) |

| Binomial \(p \in [0,1]\) | \(p_X(k) = \left(\begin{array}{c} n \\ k \end{array} \right)p^k(1-p)^{n-k}, k = 0, 1 ..., n\) | \( np \) | \(np(1-p)\) |

| Geometric \(0 < p ≤ 1\) | \(p_X(k) = (1-p)^{k-1}p, k = 1,2,3.... \) | \(\frac{1}{p}\) | \(\) |

4.5 Conditional PMF and expectation, given an event

4.5.1 Conditional PMFs

\(p_{X|A}(x|A) = P(X = x|A)\), given A = {Y = y}

\[

p_{X|Y}(x|y) = \frac{p_{X,Y}(x,y)}{p_Y(y)}

\]

4.5.2 Conditional PMFs involing more than two random variables

-

\(p_{X|Y,Z}(x|y,z) = P(X = x|Y = y, Z = z) = \frac{P(X=x,Y=y,Z=z)}{P(Y=y, Z=z)} = \frac{P_{X,Y,Z}(x,y,z)}{P_{Y,Z}(y,z)} \)

-

Multiplication rules: \(p_{X,Y,Z}(x,y,z) = p_X(x)p_{Y|X}(y|x)p_{Z|X,Y}(z|x,y) \)

-

Total probability and expectation theorems

\(p_X(x) = P(A_1)p_{X|A_1}(x) + ... + P(A_n)p_{X|A_n}(x) \implies p_X(x) = \sum\limits_y p_Y(y)p_{X|Y}(x|y)\)

\(E[X] = P(A_1)E[X|A_1] + ... + P(A_n)E[X|A_n] \implies E[X] = \sum\limits_y p_Y(y) E[X|Y = y]\)

4.6 Multiple random variables and joint PMFs

4.6.1 Joint PMF

\[ p_{X,Y}(x,y) = P(X = x, Y =y) \]

-

\(\sum\limits_x \sum\limits_y p_{X,Y}(x,y) = 1\)

-

Marginal PMFs: \(p_X(x) = \sum\limits_y p_{X,Y}(x,y)\)

\(p_Y(y) = \sum\limits_x p_{X,Y}(x,y)\)

4.6.2 Functions of multiple random variables

\(Z = g(X,Y)\)

-

PMF: \(p_Z(z) = P(Z=z) =P(g(X,Y) = z) \)

-

Expected value rules: \(E[g(X,Y)] = \sum\limits_x \sum\limits_y g(x,y) p_{X,Y}(x,y)\)

-

Linearity of expectations

-

\(E[aX + b] = aE[X] + b\)

-

\(E[X + Y] = E[X] + E[Y]\)

-

4.6.3 Independence of multiple random variables

-

\(P(X = x and Y = y) = P(X = x) \times P(Y = y), for all x, y \)

-

\(P_{X|Y}(x|y) = P_X(x)\) and \(P_{Y|X}(y|x) = P_Y(y)\)

-

Independence and expectations

-

In general, \(E[g(X,Y)] \neq g(E[X], E[Y])\)

-

If X, Y are independent: \(E[XY] = E[X]E[Y]\)

g(X) and h(Y) are also independent: \(E[g(X)h(Y)] = E[g(X)]E[h(Y)]\)

-

-

Independence and variances

-

Always true: \(var(aX) = a^2var(X)\) and \(var(X+a) = var(X)\)

-

In general: \(var(X+Y) \neq var(X) + var(Y)\)

-

If X, Y are independent, \(var(X,Y) = var(X) + var(Y)\)

-

5 Continuous random variables

5.1 Probability density function (PDFs)

5.1.1 Definition

PDFs are not probabilities. Their units are probability per unit length.

Contiunous random variables: a random variable is continuous if it can be described by a PDF.

-

\(P(X = a) = 0\)

-

\(f_X(x) \geq 0\)

-

\(\int_{-\infty}^{+\infty}f(x)dx = 1\)

Expectation/Mean

Expection/mean of a continuous random variable: average in large number of independent repetitions of the experiment

\[ E[X] = \int_{-\infty}^{+\infty}xf_X(x)dx \]

Properties of expectations

-

if X ≥ 0, then \(E[X] ≥ 0\)

-

if a ≤ X ≤ b, then \(a ≤ E[X] ≤ b\)

-

Expected value rule: \(E[g(X)] = \sum\limits_{x} g(x) f_X(x) dx \)

-

Linearity: \(E[aX + b] = aE(X) + b\)

Variance

According to the definition of variance: \(var(X) = E[(X - \mu)^2] \)

\[ var(X) = \int_{-\infty}^{+\infty} (x - \mu)^2 f_X(x) dx \]

-

Standard deviation = \(\sigma_X = \sqrt{var(X)} \)

-

\(var(aX + b) = a^2 var(X)\)

-

\(var(X) = E[X^2] - (E[X])^2\)

Summary of Expectation and Variance of continuous random variables

| Random Variables | Formula | E(X) | var(X) |

|---|---|---|---|

| Uniform | \(f(x) = \frac{1}{b-a}, a ≤ x ≤ b\) | \(\frac{a+b}{2}\) | \(\frac{(b-a)^2}{12}\) |

| Exponential \( \lambda > 0 \) | \(f(x) = \begin{cases} \lambda e^{-\lambda x}, x ≥ 0 \\ 0, x < 0 \end{cases}\) | \(\frac{1}{\lambda}\) | \(\frac{1}{\lambda^2}\) |

5.1.2 Cumulative distribution functions (CDF)

CDF defination: \(F_X(x) = P(X ≤ x )\)

-

Non-decreasing

-

\(F_X(x)\) tends to 1, as \(x \to \infty\)

-

\(F_X(x)\) tends to 0, as \(x \to - \infty\)

5.1.3 Normal(Gaussian) random variables

-

Standard normal(Gaussian) random variables

Stardard normal \(N(0,1): f_X(x) = \frac{1}{\sqrt{2\pi}} e^{-x^2/2} \)

-

\(E[X] = 0\)

-

\(var(X) = 1\)

-

-

General normal(Gaussian) random variables

General normal \(N(\mu,\sigma^2): f_X(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-(x-\mu)^2/2\sigma^2}, \sigma > 0 \)

-

\(E[X] = \mu \)

-

\( var(X) = \sigma^2 \)

\\( \sigma^2 \to small\\), the shape of normal distribution becomes more narrow. -

-

Linear functions of a normal random variable

-

Let \(Y = aX + b, X \sim N(\mu, \sigma^2)\)

\(E[Y] = a\mu + b\)

\(Var(Y) = a^2 \sigma^2 \)

-

Fact: \(Y \sim N(a\mu + b, a^2 \sigma^2)\)

-

Special case: a = 0. There is Y = b, \(N(b, 0)\)

-

5.1.4 Calculation of normal probabilities

-

Standard normal tables

\(\Phi(y) = F_Y(y) = P(Y \leq y)\) which can be find in the table, where y ≥ 0.

-

Standardizing a random variable

\(X \sim N(\mu, \sigma^2), \sigma^2 > 0 \)

\(Y = \frac{X - \mu}{\sigma}\)

5.2 Conditioning on an event: multiple continuous r.v.'s

\[ P( X \in B|A) = \int_B f_{X|A}(x)dx \]

5.2.1 Conditional PDf of X, given that \(X \in A \)

\[ f_{X|X \in A}(x) = \begin{cases} 0, if x \notin A \\ \frac{f_X(x)}{P(A)}, if x \in A \end{cases} \]

5.2.2 Conditional expectation of X, given an event

5.2.3 Memorylessness of the exponential PDF

5.2.4 Total probability and expectation theorems

- Probability theorem:

\[ P(B) = P(A_1)P(B|A_1) + \dotsb + P(A_n)P(B|A_n) \]

- For the discrete random variable:

\[ p_X(x) = P(A_1)p_{X|A_1}(x) + \dotsb + P(A_n)p_{X|A_n}(x) \]

- For CDF:

\[ F_X(x) = P(X \leq x) = P(A_1)P(X \leq x | A_1) + \dotsb + P(A_n)P(X \leq x | A_n) \\= P(A_1)F_{X|A_1}(x) + \dotsb + P(A_n)F_{X|A_n}(x) \]

- For PDF, the derivative of CDF:

\[ f_X(x) = P(X \leq x) = P(A_1)f_{X|A_1}(x) + \dotsb + P(A_n)f_{X|A_n}(x) \]

- Integral above equation, we will obtain the expectation equation:

\[ \int xf_X(x)dx = P(A_1) \int xf_{X|A_1}(x)dx + \dotsb + P(A_n) \int xf_{X|A_n}(x)dx \]

\[

E[X] = P(A_1)E[X|A_1] + \dotsb + P(A_n)E[X|A_n]

\]

5.3 Mixed random varibles

5.3.1 Mixed distirbutions

\[

X = \begin{cases} Y, \text{with probability } p \text{ (Y discrete)}\\ Z, \text{with probability } 1-p \text{ (Z continuous)} \end{cases}

\]

-

do not have PDF or PMF but can be defined with CDF and expectation

\[ F_X(x) = p P(Y \leq x) + (1-p) P(Z \leq x) \\ =pF_Y(x) + (1-p)F_Z(x) \\ = E[X] = p E[Y] + (1-p) E[Z] \]

5.3.2 Joint PDFs

-

Joint PDFs are denoted as \(f_{X,Y}(x,y)\): probaility per unit area

When X = Y, equal to a line, meaning X and Y are not joint PDFs.

5.3.3 From the joint to the marginal

5.3.4 Joint CDF

\[ F_{X,Y}(x,y) = P(X \leq x, Y \leq y) = \int\limits_{-\infty}^{y} \int\limits_{-\infty}^{x} f_{x,y}(s,t)dsdt \]

5.4 Conditioning on a random variable and Bayers rule

5.4.1 Conditional PDFs, given another r.v.

-

\(f_{X|Y}(x|y) = \frac{f_{X,Y}(x,y)}{f_Y(y)}\), if \(f_y(y) > 0\)

-

\(f_{X|Y}(x|y) \geq 0\)

-

Think of value of Y as fixed at some y shape of \(f_{X|Y}(\cdot|y)\): slice of the joint

-

multiplication rule:

\[ f_{X|Y}(x,y) = f_Y(y) \cdot f_{X|Y}(x|y)

\]

-

-

\(P(X \in A | Y = y) = \int_A f_{X|Y}(x/y)dx\)

5.4.2 Total probability and expectation theorems

-

Anolog to the PMFs of discrete randome varable \(p_X(x) = \sum\limits_y p_Y(y)p_{X|Y}(x|y)\)

For continuous r.v., there is

\[ f_X(x) = \sum_{-\infty}^{\infty} f_Y(y)f_{X|Y}(x|y)dy

\] -

Anolog to the Expectation of discrete randome varable \(E[X|Y=y] = \sum\limits_x x p_{X|Y}(x|y)\)

For continuous r.v., there is

\[ E[X|Y=y] = \int_{-\infty}^{\infty} xf_{X|Y}(x|y)dx

\] -

Anolog to the discrete randome varable \(E[X] = \sum\limits_y p_Y(y) E[X|Y=y]\)

For continuous r.v., there is

\[

E[X] = \int_{-\infty}^{\infty} f_Y(y)E[X|Y=y]dy

\\ = \int_{-\infty}^{\infty} xf_X(x)dx

\] -

Expected value rule

\[ E[g(X)|Y=y] = \int_{-\infty}^{\infty} g(x)f_{X|Y}(x|y)dx

\]

5.4.3 Independence

\[

f_{X,Y}(x,y) = f_X(x)f_Y(y), for all x and y

\]

-

\(f_{X,Y}(x,y) = f_X(x)\), for all y with \(f_Y(y) > 0\) and all x

-

If X, Y are independent:

\[ E[XY] = E[X]E[Y] \\ var(X + Y) = var(X) + var(Y) \]

g(X) and h(Y) are also independent: \(E[g(X)h(Y)] = E[g(X)] \cdot E[h(Y)]\)

5.4.4 The Bayes rule --- a theme with variations

-

For discrete r.v.,

-

\(p_{X|Y}(x|y) = \frac{p_X(x) p_{Y|X}(y|x)}{p_Y(y)}\)

-

\(p_Y(y) = \sum\limits_{x'} p_X(x')p_{Y|X}(y|x')\)

-

-

For continuous r.v.,

-

\(f_{X|Y}(x|y) = \frac{f_X(x) f_{Y|X}(y|x)}{_Y(y)}\)

-

\(p_Y(y) = \int\limits f_X(x')f_{Y|X}(y|x')\)

-

-

One discrete and one continuous r.v.

Unit 6 Further topics on random variables

6.1 Derived distributions

6.1.1 A linear function \(Y = aX + b\)

-

Discrete r.v.

\( p_Y(y) = p_X(\frac{y-b}{a}) \)

-

Continuous r.v.

\( f_Y(y) = \frac{1}{|a|}f_X(\frac{y-b}{a}) \)

-

A linear function of normal r.v. is normal

If \(X \sim N(\mu, \sigma^2)\), then \(aX + b \sim N(a\mu + b, a^2\sigma^2)\)

-

6.1.2 A general function \(g(X)\) of a continuous r.v.

Two-step procedure:

-

Find the CDF of Y: \(F_Y(y) = P(Y \leq y) = P(g(x) \leq y)\) and the valid range of y

-

Differentiate: \(f_Y(y) = \frac{dF_Y(y)}{dy}\)

-

A general formula for the PDF of \(Y = g(X)\) when g is monotomic

\[ f_Y(y) = f_X(h(y))\left|\frac{dh(y)}{dy}\right|

\]\(x = h(y)\) is the inverse function of \(y = g(x)\)

-

A nonmonotonic example \(Y = X^2\)

-

the discrete case: \(p_Y(y) = p_X(\sqrt{y}) + p_X(-\sqrt{y})\)

-

the continuous case: \(f_Y(y) = f_X(\sqrt{y})\frac{1}{2\sqrt{y}} + p_X(-\sqrt{y})\frac{1}{2\sqrt{y}}\)

-

-

A function of multiple r.v.'s: \(Z = g(X,Y)\)

6.2 Sums of independent vadom variables

6.2.1 The distribution of \(X + Y\): the discrete case

Z = X + Y; X,Y independent, discrete known PMFs

\[

p_Z(z) = \sum\limits_x p_X(x)p_Y(z-x)

\]

Dsicrete convoltion mechanics

-

Flip the PMF of Y and put it underneath the PMF of X

-

Shift the flipped PMF by z

-

Cross-multiply and add

6.2.2 The distribution of \(X + Y\): the continous case

Z = X + Y; X,Y independent, continuous known PDFs

\[

f_Z(z) = \int\limits_x f_X(x)f_Y(z-x)dx

\]

-

conditional on \(X = x\):

\[ f_{Z|x}(z|x) = f_Y(z-x)

\]which can then be used to calculate Joint PDF of Z and X and marginal PDF of Z.

-

Same mechanics as in discrete case

6.2.3 The sum of independent normal r.v.'s

-

\(X \sim N(\mu_x, \sigma_x^2), Y \sim N(\mu_y, \sigma_y^2\) Independent

\(Z = X + Y: \sim N(N(\mu_x + \mu_y, \sigma_x^2 + \sigma_y^2))\)

The sum of finitely many independent normals is normal

6.3 Covariance (协方差)

6.3.1 Definition

\[

cov(X,Y) = E[(X - E[X]) \cdot (Y - E(Y))]

\]

- If \(X,Y\) independent: \(cov(X,Y) = 0 \)

convers is not true!

6.3.2 Covariance properties

-

\(cov(X,X) = var(X) = E[X^2] - (E[X])^2\)

-

\(cov(aX+b,Y) = a \cdot cov(X,Y)\)

-

\(cov(X,Y+Z) = cov(X,Y) + cov(X,Z)\)

Practical covariance formula:

\[ cov(X,Y) = E[XY] - E[X]E[Y] \]

6.3.3 The variance of a sum of random variables

-

two r.v.s

\[ var(X_1 + X_2) = var(X_1) + var(X_2) + 2cov(X_1,X_2)

\]X,Y indepedent, then \(var(X_1 + X_2) = var(X_1) + var(X_2)\)

-

multiple r.v.s

\[ var(X_1 + \dots + X_n) = \sum\limits_{i=1}^nvar(X_i) + \sum\limits_{(i,j):i \neq j}^n cov(X_i,X_j)

\]\(\sum\limits_{(i,j):i \neq j}^n \) contains \((n^2 - n)\) terms

6.4 The correlation coefficient

\[ \rho(X,Y) = E\left[\frac{(X - E[X])}{\sigma_X} \cdot \frac{(Y - E[Y])}{\sigma_Y}\right] = \frac{cov(X,Y)}{\sigma_X \sigma_Y} \]

6.4.1 Interpretation of correlation coeffecient

-

Dimensionless version of covariance

-

Measure of the defree of "association" between X and Y

-

Association does not imply causation or influence

-

Correlation often refleces underlying, common, hidden factor

6.4.2 Key properties of the correlation coeffecient

-

\(-1 \leq \rho \leq 1\)

-

Independent \(\implies \rho = 0\) "uncorrelated" (converse is not true)

-

\(|\rho| = 1 \Leftrightarrow\) linearly related

-

\(cov(aX+b, Y) = a \cdot cov(X,Y) \implies \rho(aX+b,Y) = sigma(a)\rho(X,Y)\)

6.5 Conditional expectation and variance as a random variable

6.5.1 Conditional expecation

- Definition: \(g(Y)\) is the random variable that takes the value \(E[X|Y=y]\), if \(Y\) happens to take the value \(y\).

\[

E[X|Y] = g(Y)

\]

- Law of iterated expectations

\[

E[E[X|Y]] = E[g(Y)] = E[X]

\]

6.5.2 Conditional variance

-

Variance fundamentals

\[ var(X) = E[(X - E[X])^2] \\ var(X|Y=y) = E[(X - E[X|Y=y])^2|Y=y] \]

var(X|Y) is the r.v. that takes the value var(X|Y=y), when Y=y

-

Law of total variance

\[ var(X) = E[var(X|Y)] + var(E[X|Y])

\]var(X) = (average variability within sections) + (variability between sections)

6.6 Sum a random number of indepedent r.v.'s

Example of shopping

-

N: number of stores visited (N is a nonnegative integer r.v.)

-

\(X_i\): money spent in store i

-

\(X_i\) independent, identically distributed

-

independent of N

-

-

Let \(Y = X_1 + \dots + X_N\)

6.6.1 Expecatation of the sum

Based on the Law of iterated expectations:

\[

E[Y] = E[E[Y|N]] = E[N \cdot E[X]] = \cdot E[X]E[N]

\]

6.6.2 Variance of the sum

Based on the Law of total variance: \(var(Y) = E[var(Y|N)] + var(E[Y|N])\):

\[ var(Y) = E[N]var(X) + (E[X])^2var(N) \]

Unit 7 Bayesian inferences

7.1 Introduction to Bayesian inference

7.1.1 Basic concepts

-

Model building versus inferring unobserved variables

\[X = aS + W\]

S: signal; W: noise; a: medium (image a black box where S goes through and output X with W as noise)

-

Model building: known signal S, observe X -> infer a

-

Variable estimation: known a, observe X -> infer S

-

-

Hypothesis testing vs. estimation

-

Hypothesis testing

-

unknown takes one of few possible values

-

aim at small probability of incorrect decision

-

-

Estimation

-

numerical unknown(s)

-

aim at an estimate that is "close" to the true but unknown value

-

-

7.1.2 The Bayescian inference framework

-

Unknown \(\Theta\) - treated as a random variable prior distribution: \(p_{\Theta}\) or \(f_{\Theta}\)

-

Observation \(X\) - observation model \(p_{X|\Theta}\) or \(f_{X|\Theta}\)

-

Use appropriate version of the Bayes rule to find \(p_{X|\Theta}(\cdot | X = x)\) or \(f_{X|\Theta} (\cdot| X = x)\)

-

The output of Bayesian inference - posterior distribution

-

Maximum a posterior probability (MAP):

\(p_{\Theta|X}(\theta^*|x) = \max\limits_{\theta} p_{\Theta|X}(\theta|x)\)

\(f_{\Theta|X}(\theta^*|x) = \max\limits_{\theta} f_{\Theta|X}(\theta|x)\)

-

Conditional expectation: \(E[\Theta|X = x]\) Least Mean Square (LMS)

-

estimate: \(\hat{\theta} = g(x)\) (number)

-

estimator: \(\hat{\Theta} = g(X)\) (random variable)

-

7.1.3 Four cases

-

Discrete \(\Theta\), discrete X

- values of \(\Theta\): alternative hypotheses

\[ p_{\Theta|X}(\theta|x) = \frac{p_{\Theta}(\theta)p_{X|\Theta}(x|\theta)}{p_X(x)}

\]\[ p_X(x) = \sum\limits_{\theta'}p_{\Theta}(\theta')p_{X|\Theta}(x|\theta') \]

- conditional prob of error: Smallest under the MAP rule

\\[ P(\hat{\theta} \neq \Theta|X = x) \\]- overal probability of error:

\\[ P(\hat{\Theta} \neq \Theta) = \sum\limits_{x} P(\hat{\Theta} \neq \Theta|X = x)p_X(x) = \sum\limits_{\theta}P(\hat{\Theta} \neq \Theta|\Theta = \theta)p_{\Theta}(\theta) \\] -

Discrete \(\Theta\), Continuous X

\[ p_{\Theta|X}(\theta|x) = \frac{p_{\Theta}(\theta)f_{X|\Theta}(x|\theta)}{f_X(x)}

\]\[ f_X(x) = \sum\limits_{\theta'}p_{\Theta}(\theta')f_{x|\Theta}(x|\theta') \]

-

the same equation for conditional prob. of error

-

overall probability of error

\[ P(\hat{\Theta} \neq \Theta) = \int\limits_{x} P(\hat{\Theta} \neq \Theta|X = x)f_X(x)dx = \sum\limits_{\theta}P(\hat{\Theta} \neq \Theta|\Theta = \theta)p_{\Theta}(\theta) \]

-

-

Continuous \(\Theta\), Discrete X

\[ f_{\Theta|X}(\theta|x) = \frac{p_{\Theta}(\theta)p_{X|\Theta}(x|\theta)}{p_X(x)}

\]\[ p_X(x) = \int\limits_{\theta'}f_{\Theta}(\theta')p_{x|\Theta}(x|\theta')d\theta' \]

- Inferring the unknown bias of a coin and the Beta distribution

-

Continuous \(\Theta\), Continuous X

\[ f_{\Theta|X}(\theta|x) = \frac{f_{\Theta}(\theta)p_{X|\Theta}(x|\theta)}{p_X(x)}

\]\[ f_X(x) = \int\limits_{\theta'}f_{\Theta}(\theta')p_{x|\Theta}(x|\theta')d\theta' \]

-

Linear normal models: estimation of a noisy singal

-

Estimating the parameter of a uniform

\(X\): uniform \([0, \Theta]\)

\(\Theta\): uniform \([0, 1]\)

-

Performance evaluation of an estimator \(\hat{\Theta}\)

\(E[(\hat{\Theta} - \Theta)^2|X = x]\)

\(E[(\hat{\Theta} - \Theta)^2]\)

-

Useful equation:

\[

\int_0^1 \theta^\alpha(1-\theta)^\beta d\theta = \frac{\alpha!\beta!}{(\alpha + \beta + 1)!}

\]

7.2 Linear models with normal noise

7.2.1 Recognizing normal PDFs

-

Normal distribution: \(X \sim N(\mu, \sigma^2)\)

\(f_X(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-(x-\mu)^2/2\sigma^2}\)

-

\(f_X(x) = c e^{-(\alpha x^2 + \beta x + \gamma)}\), \(\alpha > 0\) Normal with mean \(-\beta/2\alpha\) and variance \(-1/2\alpha\)

7.2.2 Estimating a normal random variable in the presence of additive normal noise

\(X = \Theta + W\), \(\Theta, W,N :(0,1), independent\)

-

\( \hat{\theta} _{MAP} = \hat{\theta} _{LMS} = E[\Theta|X = x] = x/2\)

-

even with general means and variances:

-

posterior is normal

-

LMS and MAP estimators conincide

-

these estimators are "linear" of the form \(\hat{\Theta} = aX + b\)

-